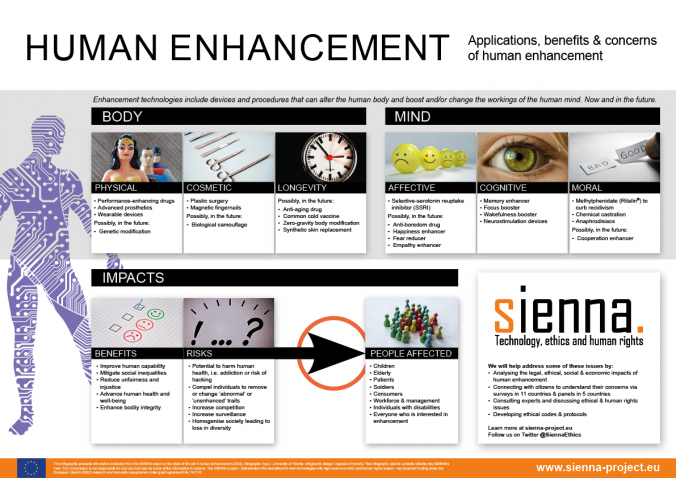

Perhaps you also dream about being more than you are: faster, better, bolder, stronger, smarter, and maybe more attractive? Until recently, technology to improve and enhance our abilities was mostly science fiction, but today we can augment our bodies and minds in ways that challenge our notions of normal and abnormal, blurring the lines between treatments and enhancements. Very few scientists and companies that develop medicines, prosthetics, and implants would say that they are in the human enhancement business. But the technologies they develop still manage to move from one domain to another. Our bodies allow for physical and cosmetic alterations, and there are attempts to make us live longer. Our minds can also be enhanced in several ways: our feelings and thoughts, perhaps also our morals, could be improved, or corrupted.

We recognise this tension from familiar debates about more common uses of enhancements: doping in sports, or students using ADHD medicines to study for exams. But there are other examples of technologies that can be used to enhance abilities. In the military context, altering our morals or using cybernetic implants could give us ‘super soldiers’. Using neuroprostheses to replace or improve memory that was damaged by neurological disease would be considered a treatment. But what happens when the same technology is repurposed for healthy people, to improve memory or another cognitive function?

There have been calls for regulation and ethical guidance, but because very few of the researchers and engineers who develop technologies that can be used to enhance abilities would call themselves enhancers, these efforts have not been very successful. Perhaps now is a good time to develop guidelines? But what is the best approach: a set of self-contained general ethical guidelines, or is the field so disparate that it requires field- or domain-specific guidance?

The SIENNA project (Stakeholder-Informed Ethics for New technologies with high socio-ecoNomic and human rights impAct) has been tasked with developing this kind of ethical guidance for three very different technological domains: Human Enhancement, Human Genomics, and Artificial Intelligence and Robotics. Not surprisingly, given how difficult the field is to delineate, human enhancement has proved to be by far the most challenging. For almost three years, the SIENNA project mapped the field, analysed the ethical implications and legal requirements, surveyed how research ethics committees address the ethical issues, and proposed ways to improve existing regulation. We have received input from stakeholders, experts, and publics. Industry representatives, academics, policymakers and ethicists have participated in workshops and reviewed documents. Focus groups in five countries and surveys with 11,000 people in 11 countries in Europe, Africa, Asia, and the Americas have also provided insight into the public’s attitudes to using different technologies to enhance abilities or performance. This work resulted in an ethical framework that outlines several options for translating the framework into practical ethical guidance.

The framework for human enhancement is built on three case studies that can bring some clarity to what is at stake in a very diverse field: antidepressants, dementia treatment, and genetics. These case studies have shed some light on the kinds of issues that are likely to appear, and on the difficulties involved in the complex task of developing ethical guidelines for human enhancement technologies.

Many of these technologies, their applications, and their enhancement potential are in their infancy. So perhaps this is the right time to promote ways for research ethics committees to inform researchers about the ethical challenges associated with human enhancement, and to encourage researchers to reflect on the potential enhancement impacts of their work in ethics self-assessments.

And perhaps it is time for ethical guidance for human enhancement after all? At least now there is an opportunity for you and others to give input in a public consultation in mid-January 2021! If you want to give input on SIENNA’s proposals for human enhancement, human genomics, artificial intelligence, and robotics, visit www.sienna-project.eu to sign up for news.

The public consultation will launch on January 11, 2021, and the deadline to submit a response is January 25, 2021.

Written by…

Josepine Fernow, Coordinator at the Centre for Research Ethics & Bioethics (CRB), and communications leader for the SIENNA project.
