As I wrote in previous posts on this blog, the discussion about the possibility of engineering an artificial form of consciousness is growing along with the impressive advances of artificial intelligence (AI). Indeed, there are many questions arising from the prospect of an artificial consciousness, including its conceivability and its possible ethical implications. We deal with these kinds of questions as part of an EU multidisciplinary project, which aims to advance towards the development of artificial awareness.

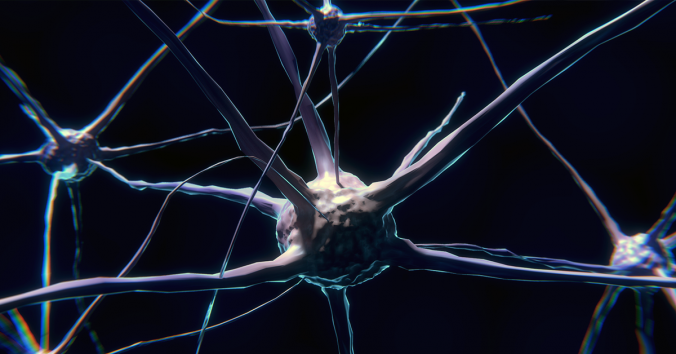

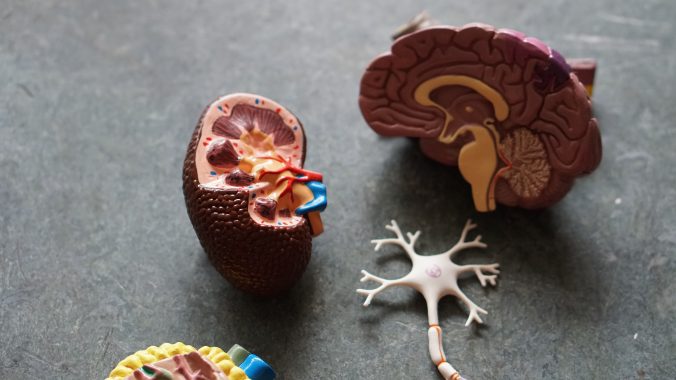

Here I want to describe the approach to artificial consciousness that I am inclined to consider the most promising. In a nutshell, the research strategy I propose for clarifying the empirical and theoretical issues surrounding the feasibility and conceivability of artificial consciousness consists in starting from the form of consciousness we are familiar with (biological consciousness) and from its correlation with the organ that science has revealed to be crucial for it (the brain).

In a recent paper, available as a pre-print, I analysed the question of the possibility of developing artificial consciousness from an evolutionary perspective, taking the evolution of the human brain and its relationship to consciousness as a benchmark. In other words, to avoid vague and abstract speculations about artificial consciousness, I believe it is necessary to consider the correlation between brain and consciousness that resulted from biological evolution, and use this correlation as a reference model for the technical attempts to engineer consciousness.

In fact, there are several structural and functional features of the human brain that appear to be key for reaching human-like complex conscious experience, which current AI is still limited in emulating or accounting for. Among these are:

- massive biochemical and neuronal diversity

- long period of epigenetic development, that is, changes in the number of neurons and in their connections in the brain network as a result of interaction with the external environment

- embodied sensorimotor experience of the world

- spontaneous brain activity, that is, an intrinsic ability to act which is independent of external stimulation

- autopoiesis, that is, the capacity to constantly reproduce and maintain itself

- emotion-based reward systems

- clear distinction between conscious and non-conscious representations, and the consequent unitary and specific properties of conscious representations

- semantic competence of the brain, expressed in the capacity for understanding

- the principle of degeneracy, which means that the same neuronal networks may support different functions, leading to plasticity and creativity.

These are just some of the brain features that arguably play a key role for biological consciousness and that may inspire current research on artificial consciousness.

Note that I am not claiming that the way consciousness arises from the brain is in principle the only possible way for consciousness to exist: this would amount to a form of biological chauvinism or anthropocentric narcissism. It is true that current AI is limited in its ability to emulate human consciousness. The reasons for these limitations are both intrinsic, that is, dependent on the structure and architecture of AI, and extrinsic, that is, dependent on the current stage of scientific and technological knowledge. Nevertheless, these limitations do not logically exclude that AI may achieve alternative forms of consciousness that are qualitatively different from human consciousness, and that these artificial forms of consciousness may be either more or less sophisticated, depending on the perspectives from which they are assessed.

In other words, we cannot exclude in advance that artificial systems are capable of achieving alien forms of consciousness, so different from ours that it may not even be appropriate to continue to call them consciousness, unless we clearly specify what is common and what is different in artificial and human consciousness. The problem is that we are limited in our language as well as in our thinking and imagination. We cannot avoid relying on what is within our epistemic horizon, but we should also avoid the fallacy of hasty generalization. Therefore, we should combine the need to start from the evolutionary correlation between brain and consciousness as a benchmark for artificial consciousness with the need to remain humble and acknowledge the possibility that artificial consciousness may be of its own kind, beyond our view.

Written by…

Michele Farisco, Postdoc Researcher at Centre for Research Ethics & Bioethics, working in the EU Flagship Human Brain Project.

