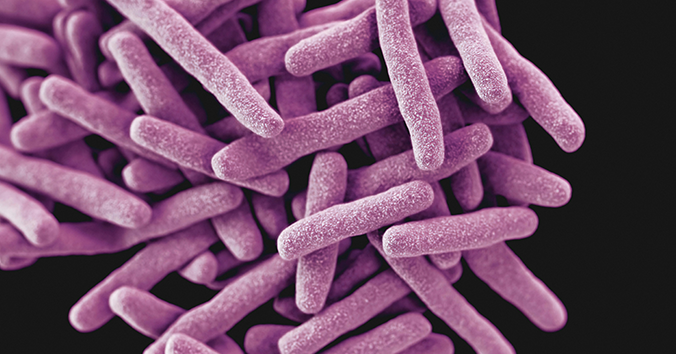

Most people are probably aware that antimicrobial resistance is one of the major threats to global health. When microorganisms develop resistance to antibiotics, more people become seriously ill from common infections, and more people die from them. It is like an arms race: by using antibiotics to defend ourselves against infections, we speed up the development of resistance. Since we still need to be able to defend ourselves against infections, antibiotics must be used more responsibly so that the development of resistance slows down.

However, fewer of us are aware that food production also contributes significantly to the development of antimicrobial resistance. In animal husbandry around the world, large amounts of antibiotics are used to protect animals against infections. This strongly accelerates the development of resistance, as the microorganisms that survive the antibiotics multiply and spread. In addition, antibiotics from animal husbandry can leak into the environment, where the contamination further accelerates the development of antimicrobial resistance. Greater responsibility is therefore required, not least in the food sector, for better animal husbandry with reduced antibiotic use.

Unfortunately, the actors involved do not seem to feel accountable for the accelerated development of antimicrobial resistance. There are many actors in the food chain: policymakers in different areas, producers, retailers and consumers. When so many different actors share a common responsibility, it is easy for each of them to hold someone else responsible. A new article (with Mirko Ancillotti at CRB as one of the co-authors) discusses how this standstill, where no one feels accountable, could be broken: by empowering consumers to exercise the power they actually have. Consumers are not as passive as we tend to think. On the contrary, through their purchasing decisions, and by communicating their choices in various ways, they can put pressure on other consumers as well as on other actors in the food chain. They can demand more transparency and better animal husbandry that is less dependent on antibiotics.

However, antimicrobial resistance is usually discussed from a medical perspective, which makes it difficult for consumers to see how their choices in the store could affect the development of resistance. If this changed and consumers were empowered to make more informed choices, they could exercise their power as consumers and influence all actors to take joint responsibility for food production's contribution to antimicrobial resistance, the authors argue. The tendency to shift responsibility onto someone else can be broken if consumers demand transparency and responsibility through their purchasing decisions. Policymakers, food producers, retailers and consumers would then be incentivized to work together to slow the development of antimicrobial resistance.

The article discusses the issue of accountability from a theoretical perspective that can motivate interventions and empirical studies. Read the article here: Antimicrobial resistance and the non-accountability effect on consumers’ behaviour.

Written by…

Pär Segerdahl, Associate Professor at the Centre for Research Ethics & Bioethics and editor of the Ethics Blog.

Nordvall, A-C., Ancillotti, M., Oljans, E., Nilsson, E. (2025). Antimicrobial resistance and the non-accountability effect on consumers’ behaviour. Social Responsibility Journal. DOI: 10.1108/SRJ-12-2023-0721

