“Consciousness” is an ambiguous concept that interests people with very different kinds of expertise, as well as the general public. This naturally gives rise to several related ambiguities, for example about how consciousness should be understood scientifically and how it can be explained. Not least, it creates uncertainty about how the scientific knowledge we have about consciousness can be translated into clinical practice.

How do we best develop our understanding of consciousness and how do we make current knowledge in the field practically useful? In an article recently published in Neuroscience & Biobehavioral Reviews, we propose a model that combines theoretical reflection, empirical research, ethical analysis, and clinical translation. Our article, Advancing the science of consciousness: from ethics to clinical care, starts from the fundamental question of how to translate significant advances in the neurobiological study of consciousness into clinical settings. A first step towards answering this question is to identify the obstacles that need to be overcome. We focus on two main obstacles: the lack of a generally agreed-upon working definition of consciousness, and the lack of consensus on how to identify reliable markers that indicate the presence of consciousness.

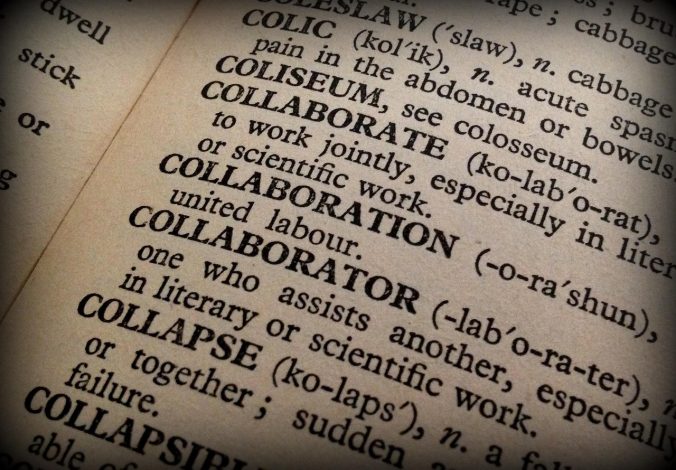

The article is the result of a multi-year collaboration between experts from various fields, including philosophy, ethics, medicine, and clinical, cognitive, and computational neuroscience, as well as representatives of patient associations. The research described in the article focuses on disorders of consciousness (DoCs), that is, conditions of impaired consciousness in patients who have suffered traumatic or non-traumatic brain injuries. This severe medical condition is relatively common, its rate of misdiagnosis is alarmingly high, and treatment options remain limited.

Following a traumatic or non-traumatic brain injury, a patient may fall into a coma, in which they are completely unresponsive and lack both of the main clinical dimensions of consciousness: wakefulness (related to the level of consciousness) and awareness (related to the content of consciousness). In the article, we set aside the broader controversy about how to define consciousness and propose that a clinically useful choice is to treat consciousness as a combination of wakefulness and awareness. This pragmatic choice should allow us to improve the clinical treatment of patients with DoCs and, consequently, their well-being.

We further describe behavioral, physiological, and computational markers and measures that recent research suggests are very promising for making more precise and reliable diagnoses of the various disorders of consciousness. International guidelines on DoCs recommend such a combination of approaches to reduce the still too high rate of misdiagnosis. Yet concerns remain about whether the available measures are effective and whether they cover the full spectrum of consciousness. Researchers are therefore seeking additional approaches and indicators. In the article, we propose that patients’ ability to perceive illusions and respond accordingly can be used to assess their capacity for conscious experience. We also propose that virtual reality can be used to detect residual consciousness and to improve interaction with patients affected by DoCs.

Technological advances alone cannot improve the current state of consciousness science. To identify the most effective strategies for translating scientific findings into better healthcare, they must be combined with ethical reflection. The ethical issues related to DoCs are numerous. In the article, we focus on some of them to illustrate the need for continued dialogue between different disciplines and stakeholders, including researchers, clinicians, and patient representatives. Among other things, we analyze misdiagnosis, as well as the risk that, by using “healthy” consciousness as the norm for what consciousness is, we may overlook the possibility that patients with DoCs retain forms of consciousness that do not conform to that norm. We also analyze uncertainties about how these patients are classified, and the need to involve family members more fully, for example through better communication and exchange of information about the patient’s condition, which can help clinicians make the most appropriate decisions. Furthermore, we analyze the promise of neurorehabilitation and neuropalliative care for these patients. Since our ethical reflection is pragmatic and action-oriented, we conclude by proposing an actionable model that clearly identifies and assigns specific responsibilities to different actors (such as institutions, researchers, clinicians, and family members).

While we cannot claim to resolve all the relevant issues, the collaboration behind this article can serve as a model for how to approach these challenges. A multidisciplinary, multi-perspective approach involving different stakeholders is needed to improve the prognosis and quality of life of patients with disorders of consciousness. It is also needed to give family members the knowledge and capacity they need to take part in the clinical care of their loved ones.

Finally, our article is a “live paper”: readers can access a number of interactive tools online on the research platform Ebrains, including datasets, computational models, and figures.

Written by…

Michele Farisco, Postdoc Researcher at Centre for Research Ethics & Bioethics, working in the EU Flagship Human Brain Project.

Michele Farisco, Kathinka Evers, Jitka Annen, et al. Advancing the science of consciousness: from ethics to clinical care, Neuroscience & Biobehavioral Reviews, Volume 180, 2026, https://doi.org/10.1016/j.neubiorev.2025.106497
