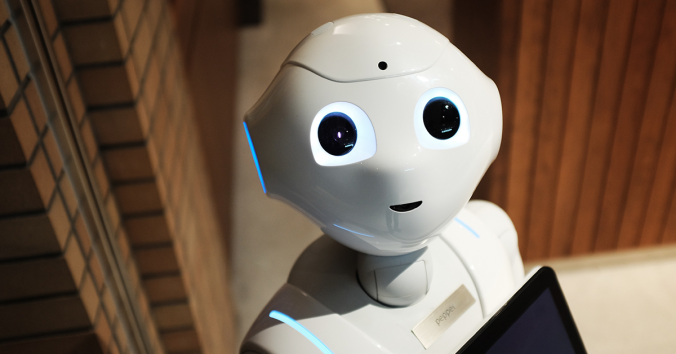

Debates about whether artificial systems can develop the capacity for subjective experience are becoming increasingly common. The topic is fascinating, and the discussion is also attracting growing public interest. Yet there is a considerable risk that reflection on it becomes ideological and imaginative rather than scientific and rational. Several factors make the idea of engineering subjective experience, for example by developing sentient robots, seem either very attractive or extremely frightening. How can we avoid getting stuck in either of these extremes, which in my opinion are equally unfortunate? I believe we need a balanced and “pragmatic” analysis of both the logical conceivability and the technical feasibility of artificial consciousness. This is what we are trying to do at CRB within the framework of the CAVAA project.

In this post, I want to illustrate what I mean by a pragmatic analysis by summarizing an article I recently wrote together with Kathinka Evers. We review five strategies that researchers in the field have developed to address the question of whether artificial systems may have the capacity for subjective experiences, whether positive ones such as pleasure or negative ones such as pain. This question is challenging when it comes to humans and other animals, but it becomes even more difficult for systems whose nature, architecture, and functions are very different from our own. Moreover, there is an additional challenge that may be particularly tricky for artificial systems: the gaming problem. The gaming problem arises because artificial systems are trained on human-generated data to reflect human traits. Since such systems can reproduce human-like behavior whether or not anything is experienced, functional and behavioral markers of sentience are unreliable and cannot be considered evidence of subjective experience.

We identify five strategies in the literature that may be used to face this challenge. A theory-based strategy starts from selected theories of consciousness to derive relevant indicators of sentience (structural or functional features that indicate conscious capacities), and then checks whether artificial systems exhibit them. A life-based strategy starts from the connection between consciousness and biological life, not in order to rule out that artificial systems can be conscious, but to argue that they must be alive in some sense in order to possibly be conscious. A brain-based strategy starts from the features of the human brain that we have identified as crucial for consciousness, and then checks whether artificial systems possess them or similar ones. A consciousness-based strategy searches for other forms of biological consciousness besides human consciousness, to identify what (if anything) is truly indispensable for consciousness and what is not. In this way, it aims to overcome the controversy between the many theories of consciousness and to move towards identifying reliable evidence for artificial consciousness. An indicator-based strategy develops a list of indicators, that is, features that we tend to agree characterize conscious experience and that can be taken as indicative (probabilistic rather than definitive evidence) of the presence of consciousness in artificial systems.

In the article, we describe the advantages and disadvantages of these five strategies. For example, the theory-based strategy has the advantage of drawing on a broad base of empirically validated theories, but it is necessarily selective with respect to which theories individual proponents of the strategy rely on. The life-based strategy has the advantage of starting from the well-established fact that all known examples of conscious systems are biological, but it can be interpreted as ruling out, from the outset, the possibility of non-biological forms of AI consciousness. The brain-based strategy has the advantage of being based on empirical evidence about the brain bases of consciousness. It avoids speculation about hypothetical alternative forms of consciousness, and it is pragmatic in the sense that it translates into specific approaches to testing machine consciousness. However, because the brain-based strategy is limited to human-like forms of consciousness, it may lead us to overlook alternative forms of machine consciousness. The consciousness-based strategy has the advantage of avoiding the anthropomorphic and anthropocentric temptation to assume that the human form of consciousness is the only possible one. One of its shortcomings, however, is that it risks addressing a major challenge (identifying AI consciousness) by taking on a possibly even greater one (providing a comparative understanding of the different forms of consciousness in nature). Finally, the indicator-based strategy has the advantage of relying on what we tend to agree characterizes conscious activity and of remaining neutral with respect to specific theories of consciousness: it is compatible with different theoretical accounts. Its drawback is that it has been developed with reference to biological consciousness, so its relevance and applicability to AI consciousness may be limited.

How can we move towards a good strategy for addressing the gaming problem and reliably assessing subjective experience in artificial systems? We suggest that the best approach is to combine the different strategies described above. We have two main reasons for this proposal. First, consciousness has different dimensions and levels, and combining different strategies increases the chances of covering this complexity. Second, to address the gaming problem, it is crucial to look for as many indicators as possible, ranging from structural and architectural indicators to functional ones and to indicators related to social and environmental dimensions: a system trained on human data may mimic some of these, but it is less likely to mimic all of them convincingly.

The question of sentient AI is fascinating, but an important reason why it engages more and more people is probably that these systems are trained to reflect human traits. It is so easy to imagine AI with feelings! Personally, I find it at least as fascinating to contribute to a scientific and rational approach to this question. If you are interested in reading more, you can find the preprint of our article here: “Is it possible to identify phenomenal consciousness in artificial systems in the light of the gaming problem?”

Written by…

Michele Farisco, Postdoc Researcher at Centre for Research Ethics & Bioethics, working in the EU Flagship Human Brain Project.

Farisco, M., & Evers, K. (2025). Is it possible to identify phenomenal consciousness in artificial systems in the light of the gaming problem? https://doi.org/10.5281/zenodo.17255877
