When the coronavirus began to spread outside China a year ago, the Director General of the World Health Organization said that we are not only fighting an epidemic, but also an infodemic. The term refers to the rapid spread of often false or questionable information.

While governments fight the pandemic through lockdowns, social media platforms such as Facebook, Twitter and YouTube fight the infodemic through other kinds of lockdowns and framings of information considered to be misinformation. Content can be given warning labels and links to what are considered more reliable sources of information. Content can also be removed and in some cases accounts can be suspended.

In an article in EMBO Reports, Emilia Niemiec asks if there are wiser ways to handle the spread of medical misinformation than by letting commercial actors censor the content on their social media platforms. In addition to the fact that censorship seems to contradict the idea of these platforms as places where everyone can freely express their opinion, it is unclear how to determine what information is false and harmful. For example, should researchers be allowed to use YouTube to discuss possible negative consequences of the lockdowns? Or should such content be removed as harmful to the fight against the pandemic?

If commercial social media platforms remove content on their own initiative, why do they choose to do so? Do they do it because the content is scientifically controversial? Or because it is controversial in terms of public opinion? Moreover, in the midst of a pandemic with a new virus, the state of knowledge is not always as clear as one might wish. In such a situation it is natural that even scientific experts disagree on certain important issues. Can social media companies then make reasonable decisions about what we currently know scientifically? We would then have a new “authority” that makes important decisions about what should be considered scientifically proven or well-grounded.

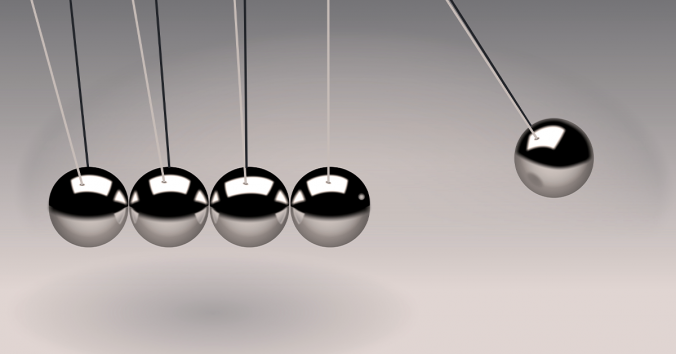

Emilia Niemiec suggests that a wiser way to deal with the spread of medical misinformation is to increase people’s knowledge of how social media works, as well as how research and research communication work. She gives several examples of what we may need to learn about social media platforms and about research to be better equipped against medical misinformation. Education as a vaccine, in other words, which immunises us against the misinformation. This immunisation should preferably take place as early as possible, she writes.

I would like to recommend Emilia Niemiec’s article as a thoughtful discussion of issues that easily provoke quick and strong opinions. Perhaps this is where the root of the problem lies. The pandemic scares us, which makes us mentally tense. Without that fear, it is difficult to understand the rapid spread of unjustifiably strong opinions about facts. Our fear in an uncertain situation makes us demand knowledge, precisely because it does not exist. Anything that does not point in the direction that our fear demands immediately arouses our anger. Fear and anger become an internal mechanism that, at lightning speed, generates hardened opinions about what is true and false, precisely because of the uncertainty of the issues and of the whole situation.

So I am dreaming of one further vaccine. Maybe we also need to immunise ourselves against the fear and the anger that uncertainty causes in our rapidly belief-forming intellects. Can we immunise ourselves against something as human as fear and anger in uncertain situations? In any case, the thoughtfulness of the article gives hope that we can.

Written by…

Pär Segerdahl, Associate Professor at the Centre for Research Ethics & Bioethics and editor of the Ethics Blog.

Niemiec, Emilia. 2020. COVID-19 and misinformation: Is censorship of social media a remedy to the spread of medical misinformation? EMBO Reports, Vol. 21, no. 11, article id e51420.
